Preseason Activities

The family and I are big hockey fans. During a normal year we are either at PNC Arena watching the Carolina Hurricanes, watching them on TV, or discussing the upcoming season. Then Covid-19 hit the U.S. and Canada, and the rest of the NHL season was cancelled. Through the summer we watched reruns of past games and kept tabs on when, or if, playoffs would even happen.

We were excited when the NHL announced playoffs were coming, but saddened to learn they would be played without fans. If you have never been to an NHL playoff game, GO! (Well, once we are allowed to go, that is.) Even if you don't care for hockey you will have a great time. The phrase "There is nothing like playoff hockey" is absolutely true, and I wanted to bring that same experience to our home.

So how do we bring the playoff hockey experience to our living room?

We have a big-screen TV to watch the game on, and the family and I will definitely be cheering loudly, but what about the rest of the experience? When the Canes score there is no goal horn, no goal song, no flashing goal light, and no rally towels to swing around. And what about intermissions, when we want to drink an overpriced beer and snack on our favorite foods from the concession stand?

Ok, so to pull off this experience we are under a time crunch: we have 2 months to figure out quite a bit and see how close we can get to a legit stadium experience. Food and drinks can be sorted later if we get the rest of it going, so let's get to the first part.

Action! Lights! Goal Horn!

I've heard of a few Raspberry Pi projects where fellow hockey fans created programs to pull scores automatically and display them or flash a light. So I knew the possibility was there, but how do we put it together and expand on it?

As luck would have it I received a $100 Amazon gift card as a gift. So now I knew what I wanted to do was possible, I had the desire to do it, and now I've received funds to make it possible. Let's get to work!

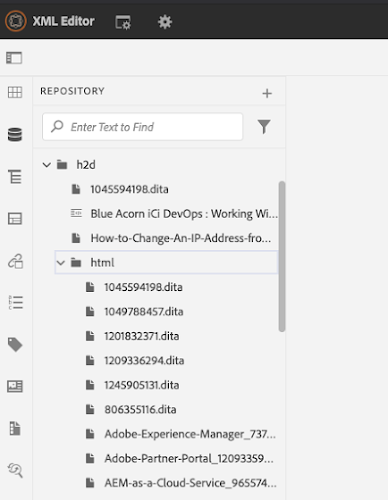

I've always wanted to get a Raspberry Pi to play around with, and now I have an excuse. Checking Amazon pricing, I could get a CanaKit Raspberry Pi 3B+ Starter Kit that has everything I'll need to get started for just under $100. Sure, I could have paid a bit more and gotten a Pi 4, but the 3B+ will have plenty of processing power and features to do exactly what I want and more.

So while we wait for our Amazon delivery, on to the next problem: where do we get the scores from so that we can make this a completely automated system?

SCORE!

Or should I say scores. After much googling around, I found there are quite a few 'real time' sports API sites, but they all want $$ and there is no guarantee the scores are truly live or that they even have NHL scores. I mean, even when watching the game on TV there is a delay between when the action actually occurred and when it was broadcast. To add to that, we will be watching the games on YouTube TV, which is a streaming service, so it is a bit of an unknown how close to real time we will be seeing things.

With some further googling I found documentation on the NHL's APIs, which don't require signing up for a service or an API key to access! Score! You can check these out here.

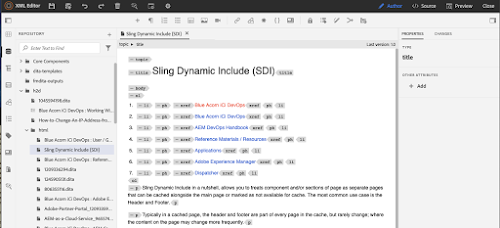

Now for some test runs of the APIs to get an idea of what information we will get back. We will use Postman to make the API calls and verify the information. If you've never used Postman before, it's a great tool for testing APIs and is free for individual developers. While this is a manual step in the process, it will help us identify which APIs we want, what data we will get back, and the expected format, so when we write the actual application we know which APIs to use.

A note on calling APIs

Some of these APIs return quite a bit of information. There are APIs for getting team info, schedules, player stats, team stats, game scores, etc. You always want to pick the APIs that return just the data you need, so you save on bandwidth and processing. For example, if you simply want to know the score of a particular game, you don't want to pull all game scores with expanded player stats. You would call the API for that one game and keep it simple.

You also want to ensure you aren't calling the APIs every millisecond. It may seem like a good way to get the most up-to-date information, but more than likely your calls will be seen as a DoS attack and your IP will get banned real fast.
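One way to keep ourselves from hammering the API is a small guard that refuses to fire more often than a chosen interval. Here is a minimal Python sketch. The `Throttle` class and its `clock` parameter are hypothetical names made up for illustration, and the interval you pick is a judgment call, not something taken from the NHL docs.

```python
import time


class Throttle:
    """Allow an action at most once every `min_interval` seconds."""

    def __init__(self, min_interval, clock=time.monotonic):
        self.min_interval = min_interval
        self._clock = clock      # injectable so the guard can be tested without waiting
        self._last = None        # time of the last allowed call, None until the first one

    def ready(self):
        """Return True (and record the time) if enough time has passed."""
        now = self._clock()
        if self._last is None or now - self._last >= self.min_interval:
            self._last = now
            return True
        return False
```

In a polling loop you would create something like `Throttle(60)` once, check `ready()` before each API call, and skip the call (or sleep) whenever it returns `False`.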

Under Further Review

Ok, let's make some calls and validate what we want. If you have taken a minute to look at the Teams APIs, you'll notice everything uses a teamId. This is an internal id that identifies each team's data. So let's find out what Carolina's teamId is.

NOTE: If you're not a Carolina fan, you can use the same calls, just substitute your team's id where we use Carolina's.

Our first call is to get all the NHL teams:

As you can see in the screenshot above, we make an HTTP GET call to https://statsapi.web.nhl.com/api/v1/teams to get all the teams. Scrolling down through the list, or doing a find, we see Carolina's info around line 390. There is a lot of info on the team here, but the part we are interested in is the line with 'id: 12'. The number '12' is what we will use in future calls to get Carolina's data.

To test this we can add '?teamId=12' to the same API call as before to get just Carolina's team data.

Success!

When we write our program we can just use '12' as the teamId variable, so we will simply make a note of it. It shouldn't ever change, so there is no need to constantly look it up.
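For anyone who would rather script the lookup than scroll through Postman, here is a rough Python sketch of the same teams call. The helper names `fetch_json` and `find_team_id` are made up for this example, and the code assumes the response is a JSON object with a `teams` list whose entries carry `id` and `name` fields, so double-check that against what Postman shows you.

```python
import json
from urllib.request import urlopen

# URL taken from the teams call described above.
TEAMS_URL = "https://statsapi.web.nhl.com/api/v1/teams"


def fetch_json(url, timeout=10):
    """Make an HTTP GET call and decode the JSON body."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def find_team_id(payload, team_name):
    """Return the id of the team called `team_name`, or None if not found.

    Assumes a payload shaped like {"teams": [{"id": ..., "name": ...}, ...]}.
    """
    for team in payload.get("teams", []):
        if team.get("name") == team_name:
            return team.get("id")
    return None
```

Calling `find_team_id(fetch_json(TEAMS_URL), "Carolina Hurricanes")` should come back with the same 12 we found by hand, provided the response looks the way this sketch assumes.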

Is Aho Playing Tonight?

In order to get the live scores on the day of the game we will need to know the gameId ahead of time. The gameId is much like the teamId in that it is a unique identifier so that we can get data about just the game we are interested in. We can reasonably expect this gameId to be different for each game the Hurricanes are playing in. So our program will need to first pull the schedule and get the gameId for the Carolina game.

If we look at the schedule API documentation, there are quite a few parameters we can use to get to the data we want. We will use the parameters teamId=12 and date=currentDate to see if there is a game and, if so, get its id. As a test, I know the published schedule shows a game on the 14th, so we will use that date to see what we get back.

If we scroll through the results there is some info on the teams playing, the venue the event is held at, etc. The part we are interested in is the gamePk, which is the unique identifier for this game. They also give us a nice link to the live feed.

If our team wasn't playing on that day we would get an empty array back for the dates field. So now we can poll the schedule once a day to find out if our team plays. If they do, we can get the start time from the schedule and, beginning at that time, start polling the live feed more frequently for scores.
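The schedule check could be sketched in Python roughly like this. A few things here are assumptions rather than facts from the docs: the `/api/v1/schedule` path (guessed by analogy with the teams URL), the `YYYY-MM-DD` date format, and the `games`, `link` and `gameDate` field names. The function names are hypothetical too. Only the empty `dates` array and the `gamePk` field come straight from what we saw in Postman.

```python
from urllib.parse import urlencode

# Assumed path, mirroring the teams URL used earlier.
SCHEDULE_URL = "https://statsapi.web.nhl.com/api/v1/schedule"


def schedule_url(team_id, date):
    """Build the schedule call for one team on one date (date as 'YYYY-MM-DD')."""
    return SCHEDULE_URL + "?" + urlencode({"teamId": team_id, "date": date})


def todays_game(payload):
    """Pull the game id, live feed link and start time out of a schedule response.

    Returns None when the team is not playing, which shows up as an
    empty "dates" array in the response.
    """
    dates = payload.get("dates", [])
    if not dates:
        return None
    games = dates[0].get("games", [])
    if not games:
        return None
    game = games[0]
    return {
        "gamePk": game.get("gamePk"),
        "link": game.get("link"),          # assumed field name for the live feed link
        "gameDate": game.get("gameDate"),  # assumed field name for the start time
    }
```

Run once a day, `todays_game(...)` returning `None` means there is nothing to do, and anything else gives us the id to poll later.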

Before we start calling the live feed, which can return over 30k lines of data and includes all the plays, etc., we will want to take a look at what is available from the docs and choose the call that best fits our needs.

There are also boxscore and linescore calls that return far less data, but still more than we need. If you still want to use the live feed, I would suggest taking a look at the diffPatch option. This allows you to give a date/time parameter, and the feed will return ALL changes since that date/time.
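If all we care about is our team's goal count, the linescore call is probably the lightest option. Here is a hedged Python sketch of pulling that one number out. The URL pattern and the `teams` / `home` / `away` / `goals` layout are assumptions about how the linescore response is shaped, and `linescore_url` and `goals_for` are made-up helper names, so verify both against a real response in Postman first.

```python
def linescore_url(game_pk):
    """Build the linescore call for one game (assumed URL pattern)."""
    return f"https://statsapi.web.nhl.com/api/v1/game/{game_pk}/linescore"


def goals_for(linescore, team_id):
    """Return the current goal count for `team_id`, or None if the team isn't in this game.

    Assumes a payload shaped like:
    {"teams": {"home": {"team": {"id": ...}, "goals": ...},
               "away": {"team": {"id": ...}, "goals": ...}}}
    """
    teams = linescore.get("teams", {})
    for side in ("home", "away"):
        entry = teams.get(side, {})
        if entry.get("team", {}).get("id") == team_id:
            return entry.get("goals")
    return None
```

Comparing the value from one poll to the next is enough to tell whether our team just scored, without ever touching the 30k-line live feed.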

Game Delay

Meh. So after doing some testing, it turns out the APIs are only updated every minute when there is an update. Although hockey is usually a low-scoring game, it is very fast paced and a 3 point lead can be lost in a minute of game play. So knowing my APIs will be at most 1 minute off, plus the unknown of how delayed my streaming service is going to be at any given moment, I need a better solution so that everything is in synch.

No one wants to watch their team score and then hear the goal horn 3 minutes later, or vice versa. To get the full experience you want to see the play, then hear the goal horn followed by the goal song. I could do a few trial runs and put a delay in to synch the TV with the app, but streaming services are at the mercy of your Internet connection and could randomly fall further behind, or buffer and catch up.

A Manual Intervention

The only way to keep everything in synch is to perform some sort of manual task or execution of the program. That way when I see the action I can execute my program and get the experience I want.

But what if I'm not in the room when they score? Also, I don't really want to lug my laptop around the house just to run this program. I mean, the program will be executed from my Pi, and I don't want to fumble with ssh sessions or try to educate my family on how to execute programs from a command line. If this is a manual task, it should be simple to do and execute immediately so that the overall hockey experience isn't lost.

I just remembered, I have an old tablet just sitting in the closet. We acquired it some time ago when everyone had one of those "Add a new line and get a free Android tablet" deals. We used the tablet for a brief time, then it was only used when traveling, and finally it just went to the closet.

Time For Some Web Work

Ok, so I think we finally have our solution, which we will build in part 2 of this blog. I'll create a web application that runs on the Pi, and we can use the tablet to access it. Since all we should need is a basic browser, it doesn't matter which one we use or how old it is. At a minimum, our web app will display a button that, when clicked, plays our team's goal horn followed by the goal song.

If you aren't familiar, every NHL team has their own goal horn and goal song.

Here is the one for the Carolina Hurricanes that I'll be putting together.

NOTE: I'm not using YouTube or anything off of it, I'm only referencing it here to give you an idea of the sound I'll be playing.

Oh crap, the Raspberry Pi doesn't have a speaker.

Oh wait! Back to the closet of lost tech toys. I got it, we have an old Altec Lansing Bluetooth speaker that no one uses anymore. It was one of those purchases we could take to the pool (yes, it's waterproof) to play music from our phones. It too got used for a while before it was sent to the closet.

Ok, so we are still on track. When someone clicks a button on our web app from the tablet, the Pi will play the goal horn followed by the goal song and turn a goal light on.
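To give a feel for how small that web app can be, here is a bare-bones Python sketch using only the standard library. It is not the part 2 implementation, just an illustration of the button-triggers-an-action idea. Everything named here (`PAGE`, `handle`, `make_handler`, `serve`, the `/goal` path, port 8000) is hypothetical, and `on_goal` is a placeholder callback where the horn, song and light code would eventually go.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# One big button that POSTs to /goal when tapped on the tablet.
PAGE = b"""<!doctype html>
<html><body>
<form method="post" action="/goal">
  <button style="font-size:4em">GOAL!</button>
</form>
</body></html>"""


def handle(method, path, on_goal):
    """Decide the response for a request; call on_goal() for POST /goal."""
    if method == "POST" and path == "/goal":
        on_goal()
        return 200, PAGE
    if method == "GET" and path == "/":
        return 200, PAGE
    return 404, b"not found"


def make_handler(on_goal):
    """Build a request handler class bound to the given goal callback."""

    class GoalHandler(BaseHTTPRequestHandler):
        def _respond(self, method):
            # Read and discard any request body before answering.
            length = int(self.headers.get("Content-Length") or 0)
            if length:
                self.rfile.read(length)
            status, body = handle(method, self.path, on_goal)
            self.send_response(status)
            self.send_header("Content-Type", "text/html; charset=utf-8")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def do_GET(self):
            self._respond("GET")

        def do_POST(self):
            self._respond("POST")

    return GoalHandler


def serve(on_goal, port=8000):
    """Listen on all interfaces so the tablet can reach the Pi."""
    HTTPServer(("0.0.0.0", port), make_handler(on_goal)).serve_forever()
```

Something like `serve(lambda: print("GOAL!"))` is enough to try it from the tablet's browser. Swapping the `print` for the real horn and light code is the work left for part 2.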

So, searching Amazon, I decided to get this light. It's cheap, simple, and will give me the effect I'm looking for.